Growing Excitement Around Generative AI in Healthcare

The ongoing market shift from Convolutional Neural Networks (CNNs) to more general AI models, known as Foundation Models, is opening up new possibilities for applying AI in clinical workflows. Narrow CNNs have limited applications, typically focused on identifying one or a few conditions, which has so far prevented providers from fully realizing the potential benefits of AI in clinical care. Foundation Models, especially Generative AI, have the potential for much broader impact, such as diagnostic interpretation of medical imaging and generation of draft radiology reports, driving greater value and efficiency throughout the clinical workflow.

Bridging the Gap

A persistent challenge across the medical field is meeting the increasing demand for diagnostic exams and patient care with a workforce whose growth is not keeping pace. Many believe that AI can help bridge this gap by enhancing provider efficiency, but it is important for users to understand how these AI models perform in real-world healthcare settings.

Chest X-ray ChAIpter Challenge

Led by the American College of Radiology’s Data Science Institute

Event Description

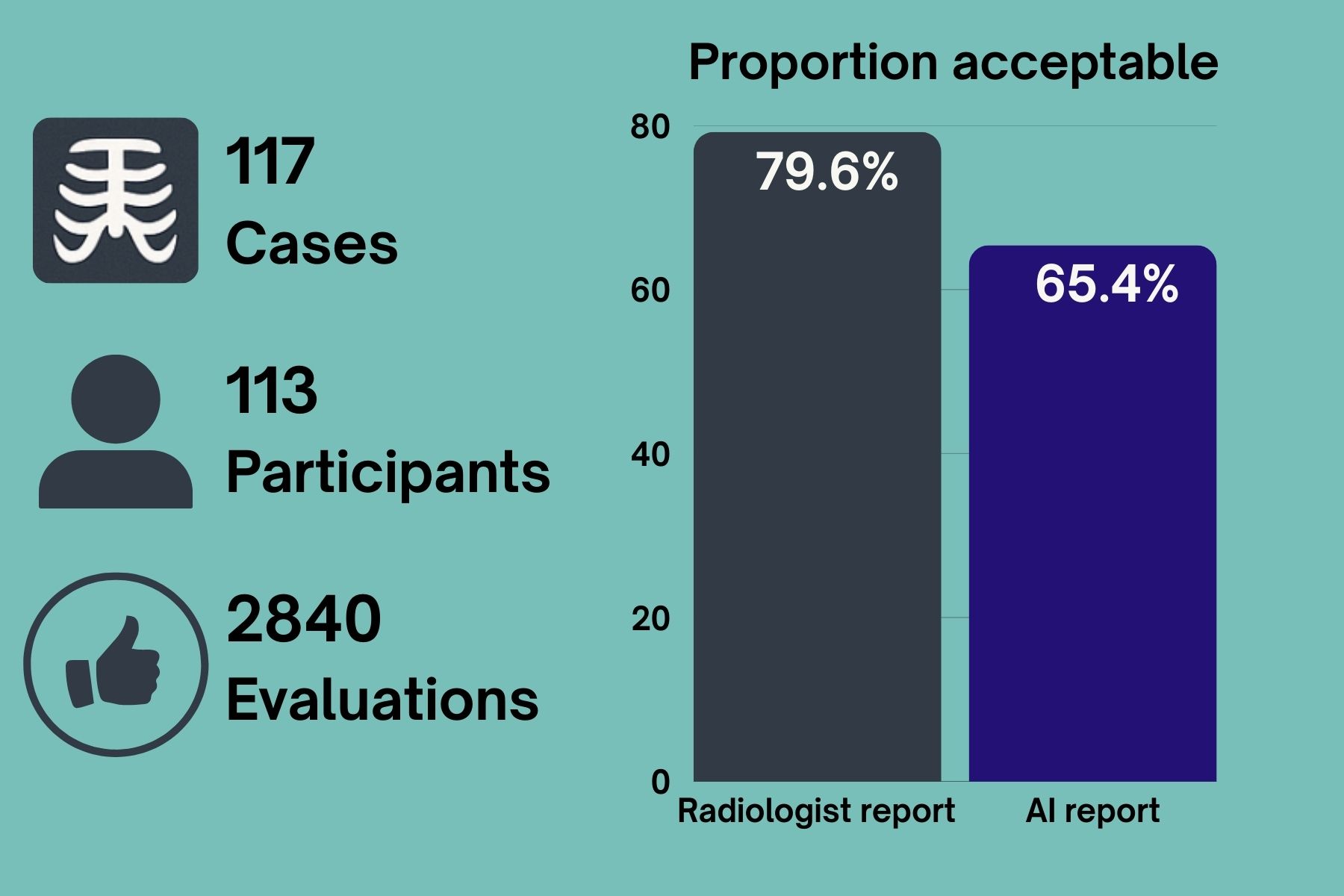

Participants are invited to evaluate both AI-generated and radiologist-written chest radiograph reports to determine whether they are clinically accurate, before discovering whether each report was created by a human or by AI.

Objective

- Evaluate the performance of a generative AI model in drafting a radiology report

Methodology

- Participants evaluate the radiology report and vote on whether they consider it clinically acceptable (Yes/No)

- After casting the vote, the source of the report (human vs AI) is displayed
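As a rough illustration of how votes collected this way could be summarized, the sketch below computes the share of "Yes" votes per report source. The function name `acceptance_rates`, the `(source, acceptable)` tuple layout, and the sample votes are all hypothetical; they are not the event's actual data format or results.

```python
from collections import defaultdict


def acceptance_rates(votes):
    """Return the fraction of 'clinically acceptable' votes per report source.

    votes: iterable of (source, acceptable) pairs, where source is a label
    such as "human" or "ai" and acceptable is True for a Yes vote.
    This layout is an assumption made for illustration.
    """
    yes = defaultdict(int)
    total = defaultdict(int)
    for source, acceptable in votes:
        total[source] += 1
        if acceptable:
            yes[source] += 1
    return {source: yes[source] / total[source] for source in total}


# Made-up votes for illustration only; these are not results from the event.
example_votes = [
    ("ai", True),
    ("ai", False),
    ("human", True),
    ("human", True),
]
rates = acceptance_rates(example_votes)
```

Because the source is revealed only after the vote is cast, a per-source comparison of this kind reflects judgments made without knowledge of who wrote the report.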

Data

- Chest X-rays represent real-world data (retrospective clinical cases, each with a corresponding radiology report created by a radiologist) sourced by Healthcare AI Challenge founding members

- All data is deidentified and includes a diverse mix of normal and abnormal cases and of acquisition parameters

Participants

- Attendees of the ACR Annual Meeting, May 3-7, 2025 (radiologists and members-in-training) from ACR chapters nationwide, using either mobile or desktop devices

Key Takeaways

- With over 120 million radiography (X-ray) exams performed globally each year (see reference 1), X-ray represents the largest driver of medical imaging workload, which makes it an ideal initial use case for evaluating how generative AI can support radiology workflows

- AI performance in this event indicates that generative AI, when supervised by qualified medical experts, may provide a useful starting point for report generation, which in turn can improve radiology throughput

- Inter-radiologist discordance in chest X-ray interpretation demonstrated in this event aligns with numerous past studies (see references 2-8), which have highlighted significant variation, even among experienced clinicians, with only fair to moderate agreement depending on clinical setting, time of day, and radiographic findings
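Terms such as "fair" and "moderate" agreement usually refer to a chance-corrected statistic like Cohen's kappa; under the commonly used Landis and Koch bands, roughly 0.21-0.40 is read as fair and 0.41-0.60 as moderate. The sketch below shows how kappa could be computed for two readers voting Yes/No on the same reports. It assumes this is the kind of statistic meant; the function name and the sample votes are hypothetical and are not data from the event or the cited studies.

```python
def cohens_kappa(reader_a, reader_b):
    """Cohen's kappa for two readers who rated the same items.

    reader_a, reader_b: equal-length lists of labels (e.g. True/False for
    "clinically acceptable"). Returns (p_o - p_e) / (1 - p_e), where p_o is
    the observed agreement and p_e the agreement expected by chance.
    """
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("readers must rate the same non-empty set of items")
    n = len(reader_a)
    observed = sum(a == b for a, b in zip(reader_a, reader_b)) / n
    labels = set(reader_a) | set(reader_b)
    expected = sum(
        (reader_a.count(label) / n) * (reader_b.count(label) / n)
        for label in labels
    )
    if expected == 1.0:
        # Both readers used one identical label throughout; kappa is
        # undefined here, so treat it as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up votes for illustration only.
votes_a = [True, True, True, True, False, False, False, False, True, False]
votes_b = [True, True, True, False, False, False, False, True, True, False]
kappa = cohens_kappa(votes_a, votes_b)
```

In this made-up example the readers agree on 8 of 10 reports, but half of that agreement would be expected by chance, which is why kappa is lower than the raw agreement rate.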

Manuscript

Coming soon

References

1. GE HealthCare, “Driving Consistency and Increasing Efficiency in X-ray IQ,” April 20, 2023. https://www.gehealthcare.com/insights/article/driving-consistency-and-increasing-efficiency-in-xray-iq

2. Pediatric Chest Radiograph Interpretation in a Real-Life Setting. Rotem-Grunbaum B, Scheuerman O, Tamary O, et al. European Journal of Pediatrics. 2024;183(10):4435-4444. doi:10.1007/s00431-024-05717-x.

3. Assessment of the Comparative Agreement Between Chest Radiographs and CT Scans in Intensive Care Units. Brooks D, Wright SE, Beattie A, et al. Journal of Critical Care. 2024;82:154760. doi:10.1016/j.jcrc.2024.154760.

4. Interobserver Agreement in Interpretation of Chest Radiographs for Pediatric Community Acquired Pneumonia: Findings of the pedCAPNETZ-cohort. Voigt GM, Thiele D, Wetzke M, et al. Pediatric Pulmonology. 2021;56(8):2676-2685. doi:10.1002/ppul.25528.

5. Variation Between Experienced Observers in the Interpretation of Accident and Emergency Radiographs. Robinson PJ, Wilson D, Coral A, Murphy A, Verow P. The British Journal of Radiology. 1999;72(856):323-30. doi:10.1259/bjr.72.856.10474490.

6. Interobserver Reliability of the Chest Radiograph in Community-Acquired Pneumonia. PORT Investigators. Albaum MN, Hill LC, Murphy M, et al. Chest. 1996;110(2):343-50. doi:10.1378/chest.110.2.343.

7. Measuring Performance in Chest Radiography. Potchen EJ, Cooper TG, Sierra AE, et al. Radiology. 2000;217(2):456-9. doi:10.1148/radiology.217.2.r00nv14456.

8. Variability in Interpretation of Chest Radiographs Among Russian Clinicians and Implications for Screening Programmes: Observational Study. Balabanova Y, Coker R, Fedorin I, et al. BMJ (Clinical Research Ed.). 2005;331(7513):379-82. doi:10.1136/bmj.331.7513.379.